August is House Music Month!

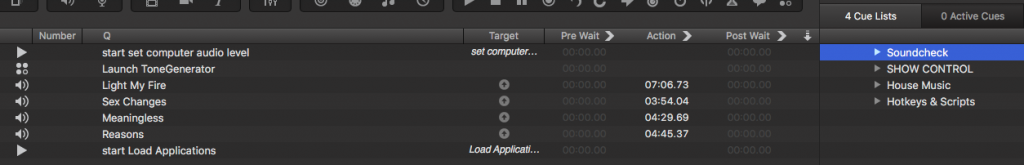

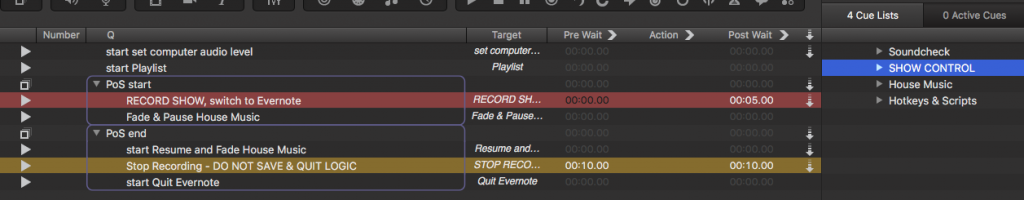

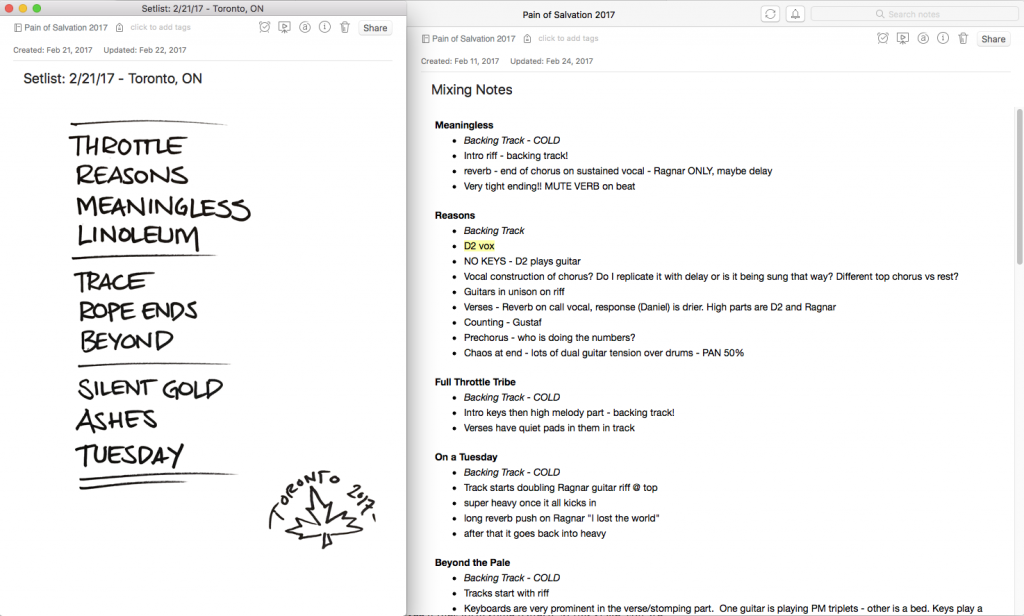

Readers of this blog know how much we at Rocktzar love the odd, esoteric parts of live production; every tutorial even has a section by that name. Our love for Qlab is also pretty obvious. That is why we can share a month’s worth of posts about house music: there is just so much to write about, especially when it comes to Qlab!

This month, we are going to delve deep into how we have built and run house music for Rocktzar shows over the years: which strategies seem to work better than others, why, and how to remain flexible in the face of changing technology.

Here are the topics that we will be posting about in August’s House Music Month:

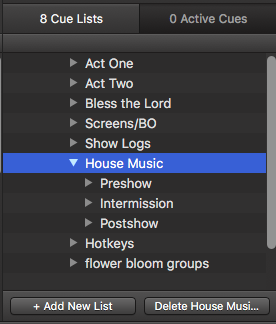

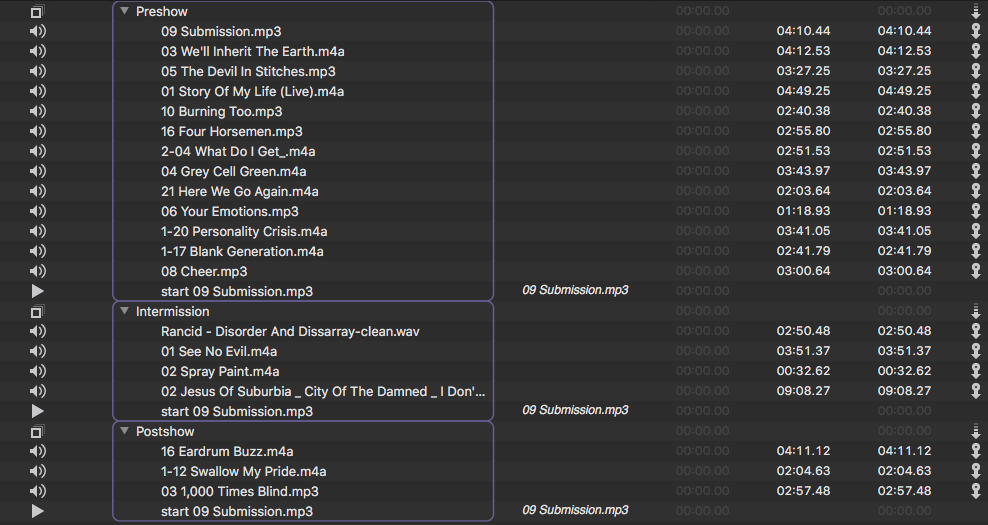

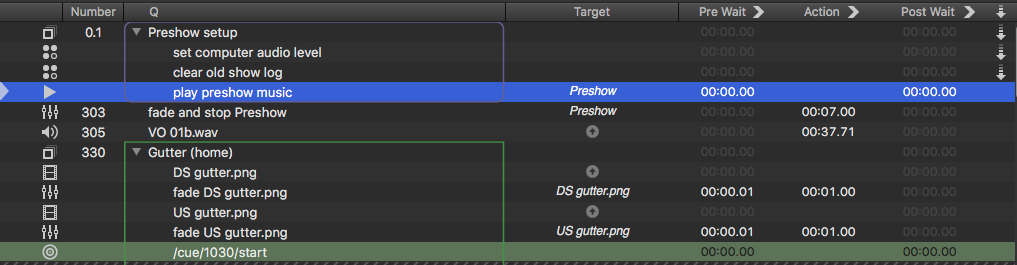

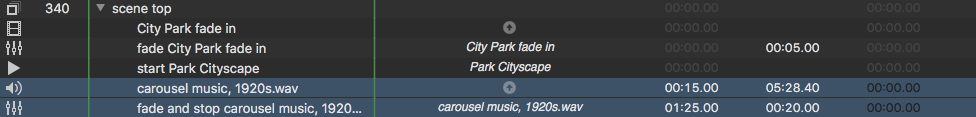

- Qlab organization – Multiple Cue Lists and House Music

- Designing house music – Qlab, CD, iTunes, or Spotify?

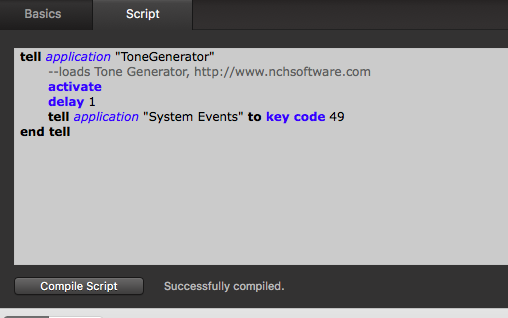

- Using Qlab to control iTunes and house music

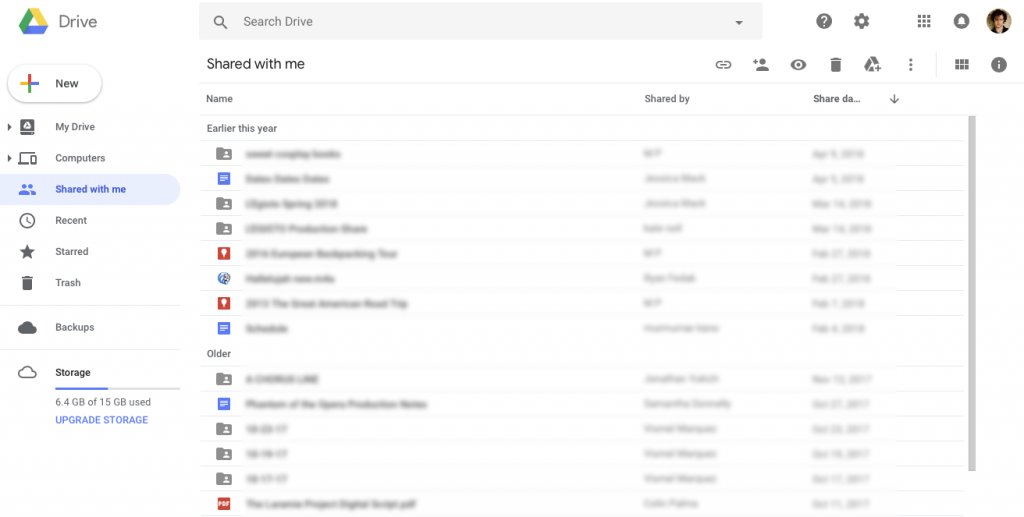

- Using Qlab to control Spotify and house music

We also have some previous posts on this topic, which we reshare on social media: